Ensembling is a machine learning technique of combining multiple models to improve the accuracy and stability of the predictions. While there are many ensemble methods out there, bagging, boosting, and stacking are the three most commonly used methods in various domains. Today in this article, we’ll explore the differences between Bagging, Boosting, and Stacking and the possibility of combining Bagging and Boosting to create more powerful ensemble models.

So, let’s start without any further ado!

Bagging

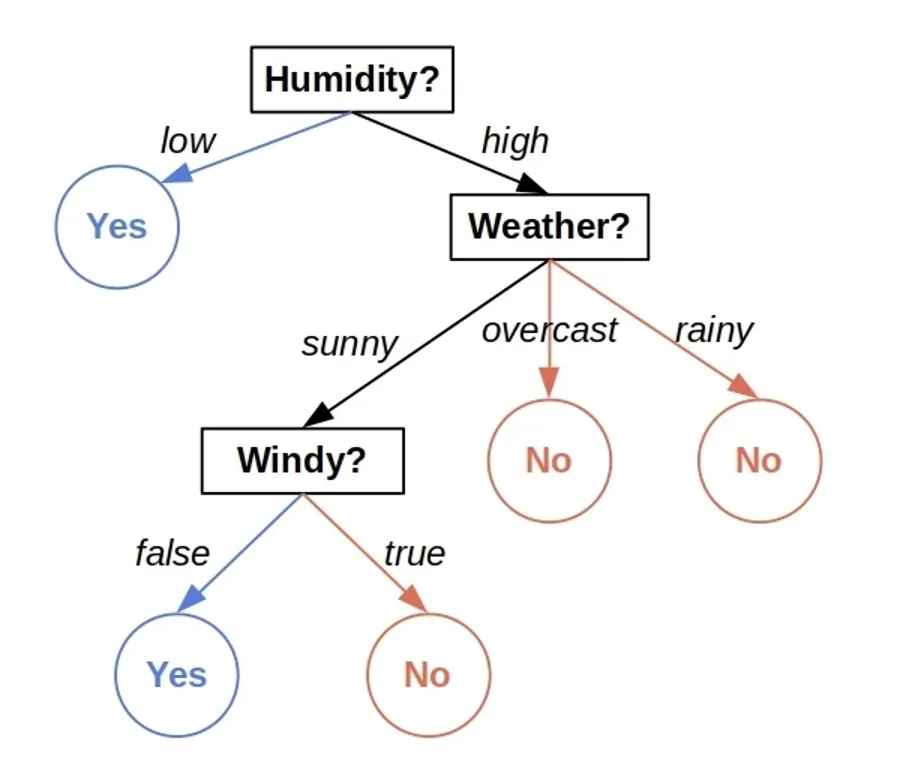

Bagging, also known as Bootstrap Aggregating, is an ensembling technique used to improve the accuracy and stability of a predictive model. It works by creating multiple models using bootstrapped samples of the training data. The comparatively weaker models are taken as base learners and are trained independently, and then their predictions are combined using averaging or voting to make the final, more accurate prediction.

The basic idea behind bagging is to reduce variance, ultimately reducing overfitting by creating multiple models on slightly different samples of the same data, improving the stability of predictions, and helping to reduce the impact of any outliers or noisy data points in the original dataset.

However, bagging is only suitable for some types of problems. For example, if working with a small data set, bagging may not improve performance significantly, and it may be better to use a simpler model. In addition, bagging may not work well if the models are highly correlated, as in the case of a linear regression model.

Bagging is commonly used with decision trees, and the resulting ensemble model is called a Random Forest. Random Forests are popular in machine learning because they are robust, easy to use, and can handle high-dimensional data.

Boosting

Boosting is an ensemble technique which is specifically used to tackle high bias in machine learning models, leading to reduced errors in predictions. The predictive accuracy of weaker learners is improved substantially by combining them into a more robust model. This approach involves training weaker models and then merging them into stronger models through an iterative processes.

Models that perform slightly better than randomly guessing are trained in a sequence on the same data set. The algorithm being utilized then assigns higher weights to misclassified data points and introduces a new weak model on this weighted dataset. This iterative process is repeated until the ensemble model’s performance reaches a level where no significant improvement can be made, or the model’s performance stops improving altogether.

Boosting algorithms can be divided into five types:

- AdaBoost works on the weighted principle by manipulating training samples in each model, correcting the error of the previous model.

- Gradient Boosting is used to build a robust ensemble classifier by combining weaker models to get a stronger one by summing the weighted predictions of all trees, excelling in regression and classification problems.

- XGBoost is a more homogenous form of Gradient Boosting. It uses advanced regularization methods and produces more accurate results than gradient boosting.

- LightGBM is a gradient boosting framework that uses tree-based learning algorithms.

- CatBoost is a boosting library which is used for regression and classification.

The benefit of boosting techniques is that they utilize a sequential method of combining multiple weak learners, which helps to improve observations iteratively. This strategy helps address the high bias in data that is often present in machine learning models.

Stacking

Last but not least, stacking is also an ensemble learning method that enhances the overall prediction accuracy by combining several models, improving classification and regression problems. It involves using the predictions of several base models as inputs to a higher-level model, also known as a meta-model, that aggregates the predictions into a final output. The term “stacking” is derived from the meta-model being stacked on top of the base models.

To use stacking, the data can be split into two parts,

- The base learners are trained in the first part.

- The second part is used to train the meta-model.

The base learners are utilized to make appropriate predictions on the second part of the training data, which is then used as input data for the meta-model. The meta-model can then be trained on the predictions of the base models. The meta-model’s purpose is to find the most optimal way to combine the predictions of base learners.

The advantage of stacking is that it combines multiple models to improve predictive performance by leveraging their unique strengths. Moreover, stacking is very flexible, allowing the use of different types of models and algorithms.

Bagging Vs Boosting Vs Stacking

Bagging reduces variance and overfitting by creating multiple models using bootstrapped samples, while boosting creates strong models iteratively by adjusting the training set’s distribution, and lastly, stacking combines the predictions of several base models to enhance the overall prediction accuracy. Bagging is effective in reducing overfitting, Boosting reduces bias, and Stacking combines the strengths of different models to improve overall performance.

Combining Bagging and Boosting

Bagging and Boosting are popular ensemble techniques that can be used together to create a stronger model, known as B&B. Boosting adjusts the training set’s distribution based on the performance of previously created classifiers, while bagging changes it randomly. Boosting assigns weights to the classifiers based on their performance, while bagging uses equal-weight voting. Boosting is better on clean data, but bagging is more robust on noisy data.

To leverage the strengths of both methods, bagging and boosting are combined using a sum voting technique. This ensemble approach is tested on standard benchmark datasets with various machine learning algorithms, including decision trees, rule learners, and Bayesian classifiers. This technique achieves the highest accuracy in most cases compared to simple bagging and boosting ensembles and other known ensembles.

Advantages of Combining Bagging and Boosting

The combination of Bagging and Boosting provides several advantages over using them individually:

- The model’s accuracy is improved by reducing bias and variance.

- Combining both methods allows for the utilization of their strengths, resulting in a model that is both more accurate and more resilient.

Disadvantages of Combining Bagging and Boosting

While the combination of Bagging and Boosting can provide significant advantages over using them individually, there are also some disadvantages to consider:

- B&B can be more complex to implement than other ensembling techniques because it requires multiple Bagging ensembles and a Boosting algorithm.

- Boosting can increase the risk of overfitting because it assigns more weight to the misclassified data points.

-

When Should I Use Ensemble Methods?

-

What is Ensemble Learning? Advantages and Disadvantages of Different Ensemble Models

-

Decision Trees Vs. Random Forests – What’s The Difference?

Wrap Up

Bagging, Boosting, and Stacking are popular ensembling techniques in Machine Learning used to improve predictive performance by combining multiple models. Bagging reduces variance and overfitting by creating various models using bootstrapped training data samples. Using boosting, weak models are iteratively trained, combining them into a single strong model to reduce high bias in data. Stacking involves using multiple base models to train a higher-level meta-model to improve overall predictive performance.

Combining Bagging and Boosting can create a more powerful ensemble model but can also increase the risk of overfitting. Each technique has advantages and disadvantages, and the choice depends on the specific problem you’re dealing with. So, make sure you don’t think of any ensemble technique as a one-fit-for-all and rather know the pros and cons of each so you can use them accordingly.