Have you ever spent hours fine-tuning a machine learning model, only to find that it falls apart when faced with new data? Or maybe you’ve tried training an algorithm on a variety of datasets, but no matter what you do, it just can’t seem to grasp the underlying patterns you’re trying to teach it. If so, don’t worry – you’re not alone. These frustrating scenarios are all too common in the world of machine learning, and they’re known as overfitting and underfitting.

Overfitting vs. Underfitting – What’s The Difference?

Overfitting and underfitting are two common problems in machine learning that occur when the model is either too complex or too simple to accurately represent the underlying data.

Overfitting happens when the model is too complex and learns the noise in the data, leading to poor performance on new, unseen data. On the other hand, underfitting happens when the model is too simple and cannot capture the patterns in the data, resulting in poor performance on both training and test datasets.

To avoid these issues, you need to understand what causes them and which precautions to take while building machine learning models. In this article, we’ll explore what overfitting and underfitting are and the factors that contribute to them. We’ll also walk through practical strategies for avoiding both, so that you can build models that generalize well to unseen data instead of simply memorizing patterns in the training data.

By the end of this article, you’ll have a solid understanding of overfitting and underfitting, as well as the tools to build more effective machine learning models. So, let’s get started!

Overfitting – What is it & How Does it Occur

Overfitting occurs when an algorithm becomes too focused on the nuances and noise of the training data rather than on the underlying patterns that could be applied to new data. One way to understand overfitting is to think of it as memorization rather than learning: an overfit model memorizes the training data, including its noise and errors, instead of learning patterns that carry over to new data.

This can result in poor performance on new data, as the model may struggle to recognize patterns that are similar but not identical to those in the training data. In some cases, an overfit model can even pick up spurious correlations that produce misleading insights, which can have serious consequences in the applications where the model is deployed.

There are several reasons why an algorithm might overfit. One common cause is a model that is too complex or detail-oriented for the problem: it has enough flexibility to capture every tiny aspect of the training data instead of focusing on the big picture. Another cause is training data that is too small or too noisy relative to the model’s complexity, which makes the quirks of individual examples easy to memorize. In these cases, the model needs to be constrained, or given more representative data, so that it learns patterns that generalize.

How do you know your model is overfitting?

It can be tricky to quickly identify whether your model is overfitting or not, especially if you don’t have a lot of experience dealing with overfitted models. So, here are some early signs of overfitting you may need to keep an eye on:

- Your model is highly accurate on the training dataset but performs noticeably worse on a separate validation dataset. A large gap between training and validation performance suggests that the model is memorizing the training data rather than generalizing to new scenarios.

- Your model has high variance, meaning its predictions change substantially when it is trained on slightly different samples of the data. This indicates that the model is too sensitive to the noise in your training dataset, so it won’t generalize well to unseen data.
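To make the first sign concrete, here is a minimal sketch in Python with NumPy that compares training and validation error for two polynomial models. The noisy sine-curve data, the every-third-point validation split, and the chosen degrees are illustrative assumptions, not a prescribed recipe. Because the degree-7 polynomial has as many coefficients as there are training points, it can pass through every training point, so its training error is essentially zero while its validation error is not.

```python
import numpy as np

# Toy data (an assumption for illustration): 12 evenly spaced points on a noisy sine curve.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 12)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)

# Hold out every third point as a validation set; train on the remaining eight.
val_mask = np.arange(x.size) % 3 == 0
x_train, y_train = x[~val_mask], y[~val_mask]
x_val, y_val = x[val_mask], y[val_mask]


def mse(coeffs, xs, ys):
    """Mean squared error of the polynomial `coeffs` evaluated on (xs, ys)."""
    return float(np.mean((np.polyval(coeffs, xs) - ys) ** 2))


results = {}
for degree in (3, 7):  # degree 7 has 8 coefficients, one per training point
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_val, y_val))
    train_err, val_err = results[degree]
    print(f"degree {degree}: train MSE {train_err:.4f}, validation MSE {val_err:.4f}")
```

A near-zero training error paired with a clearly higher validation error is the gap described above; with a real model you would compare the same two numbers from your own training and validation runs.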

That’s just a brief overview of some common early signs of overfitting. In the next sections, we’ll dive deeper into the causes and consequences of overfitting, as well as strategies to help you avoid this common problem.

What causes overfitting, and how can you avoid it?

Let’s discuss some common causes of overfitting in detail and the steps you can take to avoid them:

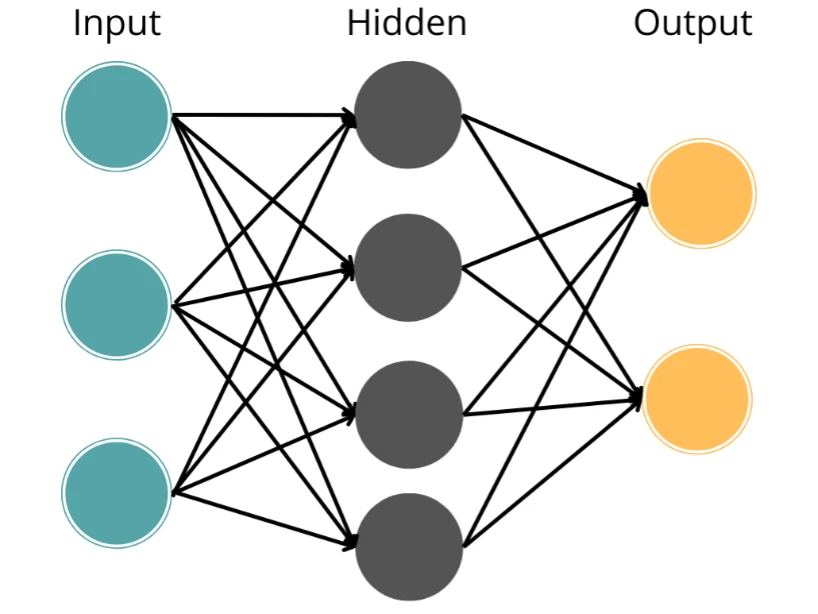

- Model Complexity:

Model complexity is a major contributor to overfitting, especially when working with smaller datasets. When a model becomes too complex, it focuses on the details and noise instead of the underlying patterns in the data, which can result in poor generalization to new data. You can avoid this by simplifying the model, for example by reducing the number of hidden layers or parameters, or by reducing the number of input features with techniques such as dimensionality reduction.
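As one illustration of dimensionality reduction, the sketch below implements principal component analysis (PCA) with NumPy’s SVD and projects ten correlated features down to two. The helper name `pca_reduce` and the synthetic data, in which ten features are noisy mixtures of two hidden signals, are assumptions chosen so that two components capture almost all of the variance. On real data you would pick `n_components` by inspecting the explained-variance ratios.

```python
import numpy as np


def pca_reduce(X, n_components):
    """Project X (n_samples x n_features) onto its top principal components.

    Returns the projected data and the fraction of variance each kept component explains.
    """
    X_centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(X_centered, full_matrices=False)
    explained_ratio = s ** 2 / np.sum(s ** 2)
    return X_centered @ vt[:n_components].T, explained_ratio[:n_components]


# Hypothetical data: 10 observed features that are noisy mixtures of 2 hidden signals.
rng = np.random.default_rng(42)
signals = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = signals @ mixing + 0.05 * rng.normal(size=(200, 10))

X_reduced, explained = pca_reduce(X, n_components=2)
print(X_reduced.shape)  # (200, 2)
print(f"variance kept: {explained.sum():.1%}")
```

A downstream model trained on `X_reduced` has far fewer inputs, and therefore fewer parameters, than one trained on all ten features.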

- Small Training Datasets:

When there isn’t enough data to train the model effectively, it memorizes the training data rather than learning the underlying patterns that can be applied to new data. To overcome this, collect more data where possible, or augment the dataset by generating modified copies of existing examples. For image data, common techniques include flipping, translating, or rotating the images.
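For image data, these basic transformations are simple array operations. The sketch below uses NumPy on a tiny 3x3 array standing in for a grayscale image; `augment` and `translate_right` are hypothetical helper names, and real pipelines typically rely on an image library’s transforms and apply them randomly during training.

```python
import numpy as np


def translate_right(image, pixels=1):
    """Shift an image right by `pixels` (must be >= 1), filling vacated columns with zeros."""
    shifted = np.zeros_like(image)
    shifted[:, pixels:] = image[:, :-pixels]
    return shifted


def augment(image):
    """Return simple augmented copies of a 2-D (height x width) image array."""
    return [
        np.fliplr(image),        # horizontal flip (mirror left-right)
        np.flipud(image),        # vertical flip (mirror top-bottom)
        np.rot90(image),         # rotate 90 degrees counter-clockwise
        translate_right(image),  # translate one pixel to the right
    ]


# A tiny 3x3 array standing in for a grayscale image.
image = np.arange(9).reshape(3, 3)
for variant in augment(image):
    print(variant, end="\n\n")
```

Each original example now yields four extra training examples that share its label, which gives the model more variety to learn from without collecting new data.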

- Noisy Data:

When there are too many “distractions” in your data, such as noise, missing values, outliers, and irrelevant features, the algorithm fixates on them instead of the real patterns. A similar effect can occur if you pass the same training data through your algorithm too many times (that is, train for too many epochs). To avoid this, hold out a separate validation dataset and check whether the model performs as well on it as on the training set. You can also use feature selection to ensure the algorithm focuses only on the most relevant features.
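Feature selection can be as simple as a filter that ranks features by how strongly they correlate with the target. The sketch below is one such univariate filter, written with NumPy; the helper name `select_top_k_features`, the synthetic data, and the choice of absolute Pearson correlation as the score are all assumptions for illustration.

```python
import numpy as np


def select_top_k_features(X, y, k):
    """Return indices of the k features most correlated (in absolute value) with y."""
    X_c = X - X.mean(axis=0)
    y_c = y - y.mean()
    # Pearson correlation of each column of X with y.
    corr = (X_c.T @ y_c) / (np.linalg.norm(X_c, axis=0) * np.linalg.norm(y_c))
    return np.argsort(-np.abs(corr))[:k]


rng = np.random.default_rng(1)
n = 500
X = rng.normal(size=(n, 6))
# Hypothetical target: only features 0 and 3 matter; the other four are pure noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=n)

selected = select_top_k_features(X, y, k=2)
print(sorted(selected.tolist()))  # [0, 3]
```

Keep in mind that a univariate filter scores each feature on its own, so it can miss features that are only useful in combination with others.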

- Incorrect Use of Hyperparameters:

Incorrect use of hyperparameters can also lead to overfitting. For example, the number of layers or the batch size (how much data is fed to the algorithm per update) can significantly affect the results. It is important to set these hyperparameters carefully and tune them appropriately to avoid overfitting. Techniques such as grid search, random search, or Bayesian optimization can be used to select appropriate values.
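Here is a minimal grid search sketch in Python with NumPy. It treats the polynomial degree as the hyperparameter, scores every candidate with 5-fold cross-validation, and keeps the degree with the lowest average validation error. The quadratic toy data and the helper `kfold_mse` are illustrative assumptions; random search would differ only in sampling candidates at random instead of trying every one.

```python
import numpy as np


def kfold_mse(x, y, degree, k=5, seed=0):
    """Average validation MSE of a polynomial of the given degree, using k-fold cross-validation."""
    idx = np.random.default_rng(seed).permutation(x.size)  # shuffle before splitting into folds
    folds = np.array_split(idx, k)
    errors = []
    for i, val_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        residuals = np.polyval(coeffs, x[val_idx]) - y[val_idx]
        errors.append(np.mean(residuals ** 2))
    return float(np.mean(errors))


# Hypothetical data: a quadratic trend plus noise.
rng = np.random.default_rng(7)
x = rng.uniform(-1.0, 1.0, 60)
y = 1.0 + 2.0 * x - 3.0 * x ** 2 + rng.normal(scale=0.1, size=x.size)

# Grid search: evaluate every candidate degree and keep the one with the lowest CV error.
grid = range(1, 9)
cv_scores = {d: kfold_mse(x, y, d) for d in grid}
best_degree = min(cv_scores, key=cv_scores.get)
print("best degree:", best_degree)
```

Using the same `seed` for every candidate means all degrees are scored on identical folds, so the comparison is fair.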

- Regularization:

Finally, a lack of regularization can also lead to overfitting. Regularization adds constraints to the algorithm that prevent it from becoming too complex and learning patterns that are specific to the training data. Techniques such as L1 or L2 regularization, early stopping, or dropout help the model remain well-generalized and effective on new data.
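Early stopping is easy to sketch in plain Python: track the validation loss after each epoch and stop once it has failed to improve for a set number of epochs (the patience). The function `early_stopping_epoch` below is a hypothetical helper that works on a precomputed list of losses for illustration; in a real training loop you would run the same check after every epoch and restore the weights saved at the best epoch.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch whose weights should be kept: the best epoch seen before
    the validation loss failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best validation loss: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training here
    return best_epoch


# Hypothetical validation losses: improving at first, then rising as the model overfits.
val_losses = [0.90, 0.60, 0.45, 0.40, 0.41, 0.43, 0.47, 0.52]
print(early_stopping_epoch(val_losses, patience=3))  # 3
```

Training stops at epoch 6, after three epochs without improvement, and the weights from epoch 3 (the lowest validation loss) are the ones to keep.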

Underfitting – What is it & How Does it Occur

Underfitting is when you dumb down your algorithm too much or make it too simple, so that it struggles to identify any real patterns in your data. It is the opposite of overfitting: the model is unable to learn the relationships between the input features and the output variable. As a result, the model produces inaccurate results, with high bias and low variance.

For example, if you use a linear model (one that can only draw a straight line through the data) to learn from data with a non-linear relationship, it fails to capture the relationship between the data points, no matter how long you train it.
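The sketch below, in Python with NumPy, reproduces this situation on synthetic data: a straight line is fitted by least squares to points that follow a quadratic curve. The data and noise level are assumptions for illustration. The fitted slope comes out close to zero and the training error stays far above the noise level, so the model fails even on the data it was trained on.

```python
import numpy as np

# Hypothetical data with a clearly non-linear (quadratic) relationship.
rng = np.random.default_rng(3)
x = np.linspace(-3.0, 3.0, 100)
noise_std = 0.3
y = x ** 2 + rng.normal(scale=noise_std, size=x.size)

# Fit a straight line y ≈ slope * x + intercept by least squares.
slope, intercept = np.polyfit(x, y, 1)
train_mse = float(np.mean((slope * x + intercept - y) ** 2))
print(f"slope {slope:.2f}, intercept {intercept:.2f}")
print(f"training MSE {train_mse:.2f} vs. noise variance {noise_std ** 2:.2f}")
```

Because the curve is symmetric around zero, the best straight line is roughly flat, and no amount of extra training can make it bend.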

Early detection of underfitting is essential to avoid wasting time and resources on a poorly performing model. Below are some of the most common signs to look out for:

- The model performs poorly even on the training data it was fitted to, let alone on the validation data. If it can’t produce accurate results on data it has already seen, it is most likely underfitting and hasn’t been able to learn enough from the data.

- The model’s hyperparameters are left at their default values or have not been adjusted for the dataset.

- Both the training loss and the validation loss remain high and close to each other. When the model is underfitting, the training loss plateaus early at a high value instead of continuing to decrease, which means the model is not learning from the data as it should be.
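These signs, together with the overfitting signs discussed earlier, can be condensed into a rough rule of thumb. The `diagnose` function below is a hypothetical sketch in plain Python; the `acceptable_error` level and the `max_gap_ratio` threshold are assumptions that you would set for your own task and metric.

```python
def diagnose(train_error, val_error, acceptable_error, max_gap_ratio=1.5):
    """Rough rule of thumb for reading training vs. validation error (lower is better).

    acceptable_error: the error you would be satisfied with for this task (an assumption).
    max_gap_ratio: how many times worse validation error may be than training error
        before we treat the difference as a generalization gap (also an assumption).
    """
    if train_error > acceptable_error:
        # Poor results even on the data the model was trained on.
        return "underfitting"
    if val_error > max_gap_ratio * train_error:
        # Fits the training data well but does much worse on held-out data.
        return "overfitting"
    return "good fit"


print(diagnose(train_error=0.30, val_error=0.32, acceptable_error=0.10))  # underfitting
print(diagnose(train_error=0.02, val_error=0.25, acceptable_error=0.10))  # overfitting
print(diagnose(train_error=0.06, val_error=0.07, acceptable_error=0.10))  # good fit
```

The order of the checks matters: a high training error is treated as underfitting first, because a generalization gap is only meaningful once the model fits the training data reasonably well.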

What causes underfitting, and how can you avoid it?

Just like with overfitting, let’s discuss some common causes of underfitting in detail, along with the steps you can take to avoid them:

- Insufficient Data:

If the dataset is too small or lacking in diversity, the model will not be able to learn enough to make accurate predictions. One way to address this is to gather more data or use techniques such as data augmentation to increase the variety of the data.

- Too Much Regularization:

Although regularization is a valuable tool for protecting your model against overfitting, too much regularization can lead to underfitting. It is important to find the right balance between preventing overfitting and allowing the model to learn from the data.
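To see how too much regularization causes underfitting, the sketch below fits L2-regularized (ridge) linear regression in closed form with NumPy for three penalty strengths. The helper `ridge_fit`, the synthetic data, and the `lam` values are assumptions for illustration, and the model has no intercept term because the data is generated without one. As `lam` grows, the weights shrink toward zero and the training error rises; at the largest value the model barely fits the training data at all.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: w = (X^T X + lam * I)^(-1) X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


# Hypothetical data: 3 features, known true weights, small noise, no intercept.
rng = np.random.default_rng(5)
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + 0.1 * rng.normal(size=100)

train_mse_by_lam = {}
for lam in (0.01, 10.0, 1e4):
    w = ridge_fit(X, y, lam)
    train_mse_by_lam[lam] = float(np.mean((X @ w - y) ** 2))
    print(f"lam={lam:g}: weights {np.round(w, 2)}, training MSE {train_mse_by_lam[lam]:.3f}")
```

In practice you would pick `lam` on a validation set (for example with the grid search or cross-validation approaches mentioned earlier) rather than by hand.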

- Simple Models:

If the model is too simple, it may not be able to capture the complexity of the data. Increasing the model’s complexity by adding more layers or parameters can help it learn more intricate relationships between the features and the target variable.
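With a linear model, one simple way to add capacity is to add features. The sketch below, in Python with NumPy, expands a single input into polynomial features and refits by least squares; the cubic toy data and the helper `poly_features` are assumptions for illustration. Moving from degree 1 to degree 3 lets the model represent the curve, and the training error drops to roughly the noise level. In a neural network, adding layers or units plays the analogous role.

```python
import numpy as np


def poly_features(x, degree):
    """Columns [1, x, x^2, ..., x^degree]: a simple way to add capacity to a linear model."""
    return np.vander(x, degree + 1, increasing=True)


rng = np.random.default_rng(11)
x = rng.uniform(-2.0, 2.0, 80)
y = 0.5 * x ** 3 - x + rng.normal(scale=0.2, size=x.size)  # hypothetical cubic relationship

train_mse = {}
for degree in (1, 3):
    X = poly_features(x, degree)
    w, *_ = np.linalg.lstsq(X, y, rcond=None)  # ordinary least-squares weights
    train_mse[degree] = float(np.mean((X @ w - y) ** 2))
    print(f"degree {degree}: training MSE {train_mse[degree]:.3f}")
```

Adding capacity should stop once validation error stops improving; otherwise the fix for underfitting becomes a new source of overfitting.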

- Inappropriate Hyperparameters:

Hyperparameters such as learning rate, batch size, and number of epochs can significantly affect the performance of the model. Choosing inappropriate values for these hyperparameters can lead to underfitting. Grid search or random search can be used to tune hyperparameters.
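The effect of the learning rate and the number of epochs is easy to see with plain gradient descent. The sketch below, in Python with NumPy, fits a line to synthetic linear data twice: once with a tiny learning rate and only 20 epochs, and once with a larger learning rate and more epochs. The helper `train_linear_gd` and the specific values are assumptions chosen to make the contrast visible. The first run stops long before the weights approach the true slope, so the model underfits even though it is the right kind of model for the data.

```python
import numpy as np


def train_linear_gd(x, y, learning_rate, epochs):
    """Fit y ≈ w*x + b by full-batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        error = w * x + b - y
        # Gradients of mean(error**2) with respect to w and b.
        grad_w = 2.0 * np.mean(error * x)
        grad_b = 2.0 * np.mean(error)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    train_mse = float(np.mean((w * x + b - y) ** 2))
    return float(w), float(b), train_mse


# Hypothetical data with a true slope of 4 and intercept of 1.
rng = np.random.default_rng(2)
x = rng.uniform(-1.0, 1.0, 200)
y = 4.0 * x + 1.0 + rng.normal(scale=0.1, size=x.size)

results = {}
for learning_rate, epochs in ((0.001, 20), (0.1, 500)):
    w, b, train_mse = train_linear_gd(x, y, learning_rate, epochs)
    results[(learning_rate, epochs)] = train_mse
    print(f"lr={learning_rate}, epochs={epochs}: w={w:.2f}, b={b:.2f}, training MSE {train_mse:.3f}")
```

Note that a learning rate that is too large can cause the opposite problem, with the loss oscillating or diverging, which is why these values are usually tuned together.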

Overall, avoiding underfitting requires balancing simplicity and complexity, having enough data, and finding the right hyperparameters and regularization techniques.

Wrap Up

Overfitting and underfitting are common problems that can occur when building machine learning models. However, these issues can be overcome through proper experimentation, setting appropriate parameters, and finding the right balance between complexity and simplicity. By implementing the strategies and precautions outlined in this article, you can build models that accurately represent the underlying data and generalize well on unseen data.

Lastly, here’s a summary table for you to quickly compare overfitting and underfitting:

|  | Overfitting | Underfitting |
| --- | --- | --- |
| Cause | Model is too complex, fits noise in data | Model is too simple, cannot capture patterns in data |
| Training Set Performance | Low error, high accuracy | High error, low accuracy |
| Test Set Performance | High error, low accuracy | High error, low accuracy |
| Solution | Regularization, cross-validation, early stopping | Increase model complexity, add more data, tune hyperparameters |
| Precautions | Avoid over-engineering the model, use more data, perform regularization, use cross-validation, apply early stopping | Increase model complexity, use more data, avoid under-engineering the model, tune hyperparameters |